Consequential Week for Artificial Intelligence

It's been a consequential week for artificial intelligence: Google released its newest A.I. model, Gemini, which beat out OpenAI's best technology in some tests.

But some, including Pope Francis, who was shown in fake A.I. photos that went viral earlier this year, have become hesitant about an A.I. arms race. On Thursday, the pope called for a binding international treaty to avoid what he called "technological dictatorship." But as artificial intelligence becomes more powerful, the companies building it are increasingly keeping the tech closely guarded.

One exception is Meta, the company that owns Facebook and Instagram. Meta says there's a better and fairer way to build A.I. without a handful of companies gaining too much power. Unlike other big technology labs, Meta publishes and shares its research, which Joelle Pineau, the leader of Meta's Fundamental A.I. Research group, or FAIR, said differentiates the company.

FAIR developed PyTorch, a crucial piece of coding infrastructure for modern artificial intelligence, and has made it open source. This means the program is available to the public, and independent developers can access and modify it. Meta, FAIR's parent company, hopes this open approach will help it compete with tech giants like Google and Microsoft.

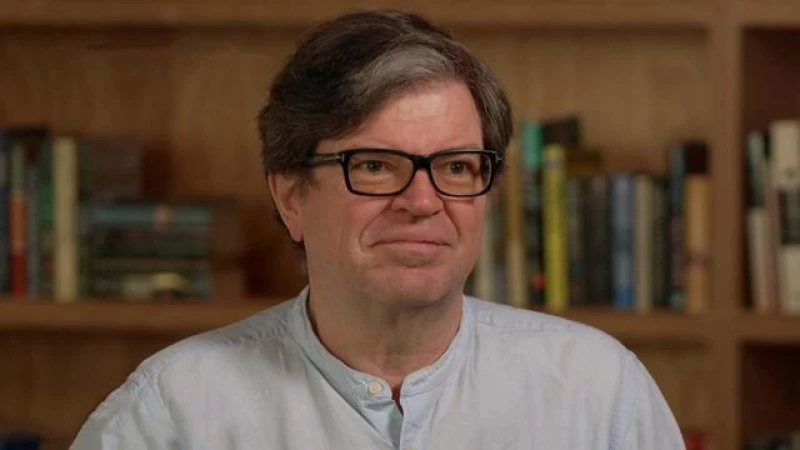

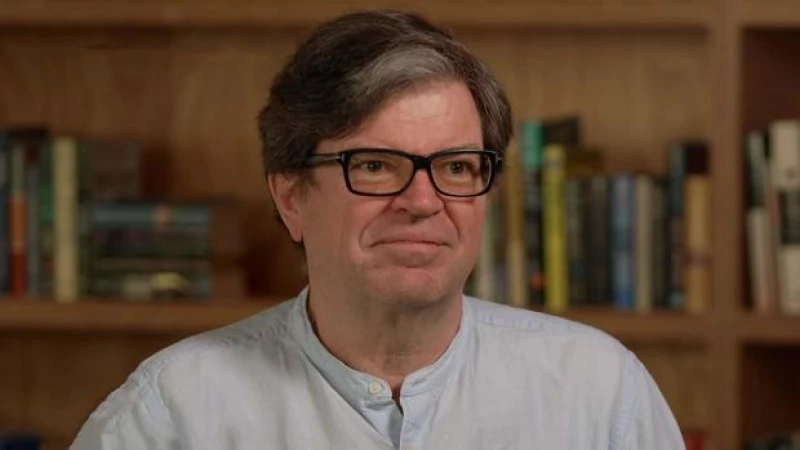

Yann LeCun, the chief A.I. scientist at Meta and the pioneer behind PyTorch, has always been a strong advocate for open research. He believes that collaboration and knowledge sharing are essential for scientific progress. LeCun was hired by Mark Zuckerberg to start FAIR in 2013 and has been instrumental in advancing the field of artificial intelligence.

PyTorch was developed by a small group of scientists, including LeCun, who were among the few working on neural nets at the time. The program has since become a fundamental tool for A.I. researchers and developers.

Meta's Push for Open-Source Development

Geoffrey Hinton, who spoke to CBS Saturday Morning about A.I. in March 2023, and that little band of upstarts were eventually proven right. In 2018, Hinton, LeCun and Yoshua Bengio, all so-called "Godfathers of A.I.," shared the Turing Award for their groundbreaking research.

After Zuckerberg hired him at Facebook, LeCun's A.I. work helped the social media site recommend friends, optimize ads and automatically censor posts that violate the platform's rules.

"If you try to rip out deep learning out of Meta today, the entire company crumbles, it's literally built around it," LeCun explained.

However, the dynamic is changing fast. A.I. isn't just supporting existing technology, but threatening to overturn it, creating a possible future where the open Internet with its millions of independent websites is replaced by a handful of powerful A.I. systems.

"If you imagine this kind of future where all of our information diet is mediated by those AI systems, you do not want those things to be controlled by a small number of companies on the West Coast of the U.S.," LeCun said. "Those systems will constitute the repository of all human knowledge and culture. You can't have that centralized. It needs to be open."

The Debate on the Risks of Artificial Intelligence

There is a growing debate over the potential dangers of A.I. Some experts, like LeCun, argue that the technology is so transformative that it should be publicly accessible, while others worry about its risks and advocate a more controlled development process. Even the "Godfathers of A.I." disagree. Hinton warns about the possibility of A.I. "taking over" from humans, while Bengio calls for regulation of the technology. LeCun, on the other hand, believes in open-sourcing and dismisses Hinton's theory, considering the idea of A.I. wiping out humanity to be science fiction.

"It's less likely than an asteroid hitting the earth or global nuclear war," LeCun said. He believes that the disagreement stems from one's faith in humanity. LeCun trusts global institutions to ensure the safety of A.I., while Hinton worries about repressive governments using robot soldiers to commit atrocities. LeCun sees potential benefits in autonomous weapons, citing Ukraine's use of drones with integrated A.I. as an example. He argues that such weapons are a necessity, regardless of one's opinion of them. The question, as LeCun poses it, is who will possess the superior technology: the good guys or the bad guys.

Robots in the Household: The Future of A.I.

Armed with a mix of optimism and a sense of inevitability, experts in the field are opposing regulation of basic A.I. research. One such expert is Yann LeCun, who is focused on developing robots that can perform basic household tasks. While LeCun acknowledges the potential risks of superintelligence, he believes it is still far off.

Dhruv Batra, who oversees Meta's robotics lab, is working on creating a helpful, autonomous domestic robot. Although Batra and his team have made significant progress, he admits that it will be a long time before robots are capable of fully managing household tasks for the general public. According to Batra, robots and A.I. systems still lack a great deal of common-sense knowledge.

As humans continue to pursue advancements in A.I., they are gradually teaching robots the skills necessary for superhuman intelligence. However, Yann LeCun believes that this new force will not replace humans, but rather empower them. He emphasizes the importance of implementing A.I. technology responsibly and mitigating any potential side effects, but believes that the benefits of A.I. far outweigh the dangers.